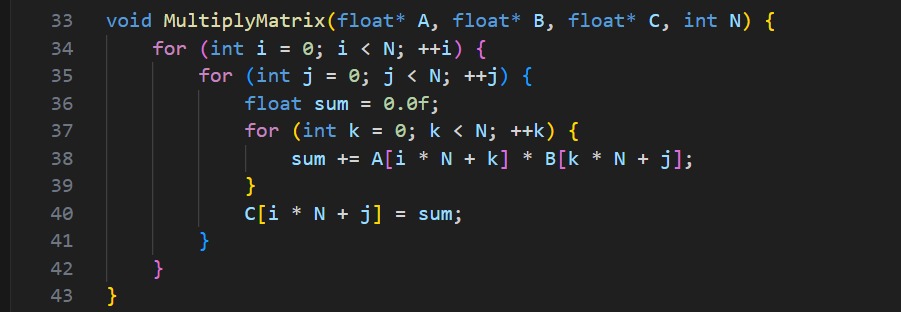

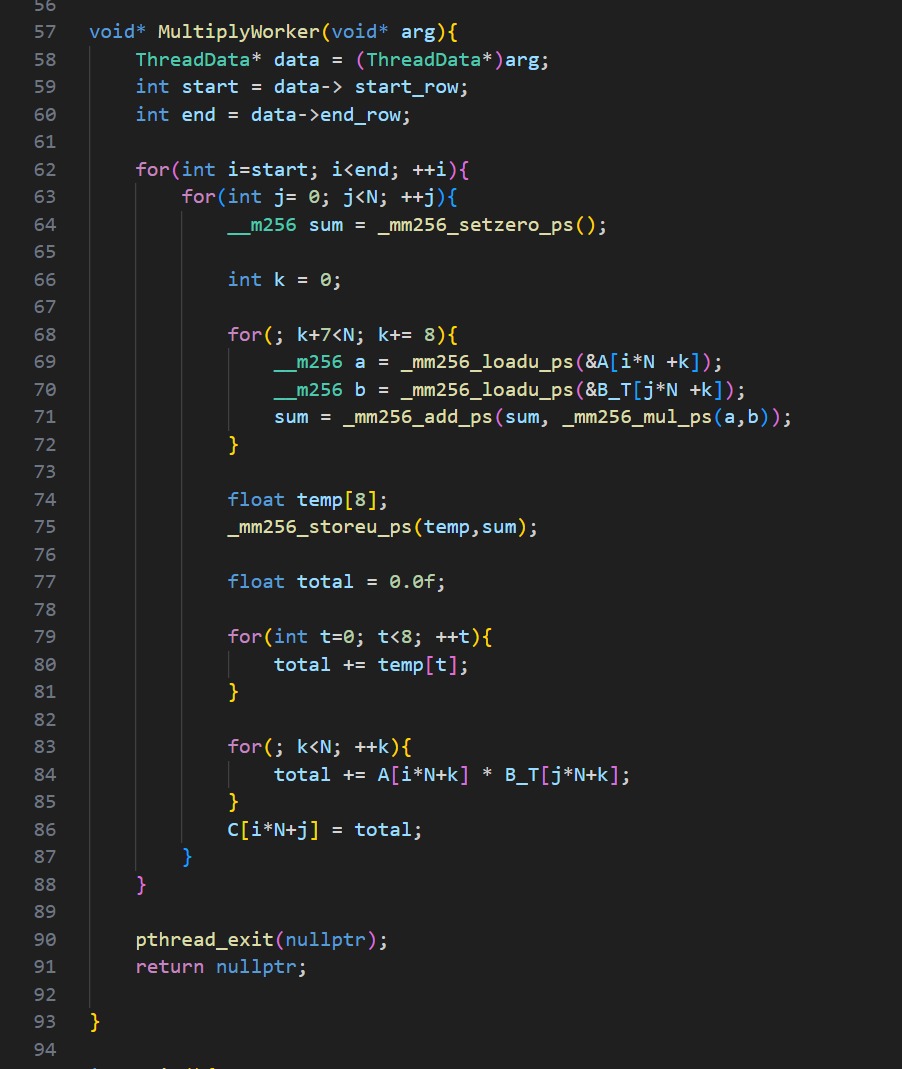

SIMD Optimization Case Study

Row–column dot products computed in parallel lanes

A compact study of matrix multiplication in C++ with a focus on SIMD (AVX).

The page is visual-first and code-light; details are in the report.

Left: dot products executed in parallel lanes. Middle: 32-byte register pipeline. Right: compact die thumbnail.

AVX Core

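```cpp
#include <immintrin.h>  // AVX intrinsics (__m256, _mm256_*)
#include <pthread.h>

// A, B_T, C, N, and ThreadData are defined elsewhere in the program (not shown here).
// B_T holds B transposed, so row j of B_T is column j of B and both loads are contiguous.
void* MultiplyWorker(void* arg) {
    ThreadData* data = static_cast<ThreadData*>(arg);
    int start = data->start_row;
    int end = data->end_row;

    for (int i = start; i < end; ++i) {
        for (int j = 0; j < N; ++j) {
            __m256 sum = _mm256_setzero_ps();  // 8 partial sums, one per lane
            int k = 0;

            // Vector body: multiply and accumulate 8 floats per iteration.
            for (; k + 7 < N; k += 8) {
                __m256 a = _mm256_loadu_ps(&A[i * N + k]);
                __m256 b = _mm256_loadu_ps(&B_T[j * N + k]);
                sum = _mm256_add_ps(sum, _mm256_mul_ps(a, b));
            }

            // Horizontal reduction: spill the 8 lanes and add them up.
            float temp[8];
            _mm256_storeu_ps(temp, sum);
            float total = 0.0f;
            for (int t = 0; t < 8; ++t) {
                total += temp[t];
            }

            // Scalar tail: remaining elements when N is not a multiple of 8.
            for (; k < N; ++k) {
                total += A[i * N + k] * B_T[j * N + k];
            }

            C[i * N + j] = total;
        }
    }

    // Returning from the start routine ends the thread, so a separate
    // pthread_exit call before this line is not needed.
    return nullptr;
}
```

Build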

```bash
g++ -O2 -mavx -pthread main.cpp -o program
```
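
The page does not show how `MultiplyWorker` is launched. The following is a minimal sketch of one possible driver, not the project's actual code. It assumes square row-major matrices, globals named like those the worker reads (`A`, `B_T`, `C`, `N`), and a `ThreadData` holding a half-open row range. `Transpose`, `Multiply`, and `ScalarWorker` are hypothetical names introduced here; the scalar worker is only a stand-in so the sketch compiles without AVX support.

```cpp
#include <pthread.h>
#include <algorithm>
#include <vector>

// Assumed globals, mirroring the names MultiplyWorker reads.
static int N = 0;
static std::vector<float> A, B_T, C;

// Assumed layout: rows [start_row, end_row) belong to one thread.
struct ThreadData {
    int start_row;
    int end_row;
};

// Hypothetical scalar stand-in with the same signature as MultiplyWorker.
void* ScalarWorker(void* arg) {
    ThreadData* data = static_cast<ThreadData*>(arg);
    for (int i = data->start_row; i < data->end_row; ++i) {
        for (int j = 0; j < N; ++j) {
            float total = 0.0f;
            for (int k = 0; k < N; ++k) {
                total += A[i * N + k] * B_T[j * N + k];
            }
            C[i * N + j] = total;
        }
    }
    return nullptr;
}

// Transpose an n x n row-major matrix so that columns become contiguous rows.
std::vector<float> Transpose(const std::vector<float>& m, int n) {
    std::vector<float> t(m.size());
    for (int r = 0; r < n; ++r) {
        for (int c = 0; c < n; ++c) {
            t[c * n + r] = m[r * n + c];
        }
    }
    return t;
}

// Compute a * b by splitting the rows of the result across num_threads workers.
std::vector<float> Multiply(const std::vector<float>& a,
                            const std::vector<float>& b,
                            int n, int num_threads,
                            void* (*worker)(void*) = ScalarWorker) {
    num_threads = std::max(1, num_threads);
    N = n;
    A = a;
    B_T = Transpose(b, n);
    C.assign(static_cast<size_t>(n) * n, 0.0f);

    std::vector<pthread_t> threads(num_threads);
    std::vector<ThreadData> data(num_threads);
    std::vector<char> started(num_threads, 0);
    int rows_per_thread = (n + num_threads - 1) / num_threads;  // ceiling division

    for (int t = 0; t < num_threads; ++t) {
        data[t].start_row = std::min(n, t * rows_per_thread);
        data[t].end_row = std::min(n, (t + 1) * rows_per_thread);
        if (pthread_create(&threads[t], nullptr, worker, &data[t]) == 0) {
            started[t] = 1;
        } else {
            worker(&data[t]);  // fall back to running this slice inline
        }
    }
    for (int t = 0; t < num_threads; ++t) {
        if (started[t]) pthread_join(threads[t], nullptr);
    }
    return C;
}
```

Because each thread writes a disjoint set of rows of `C`, the workers need no locking. Passing the AVX `MultiplyWorker` as the last argument would reuse the same partitioning, provided it reads these globals and the program is built with `-mavx`.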